Notochord

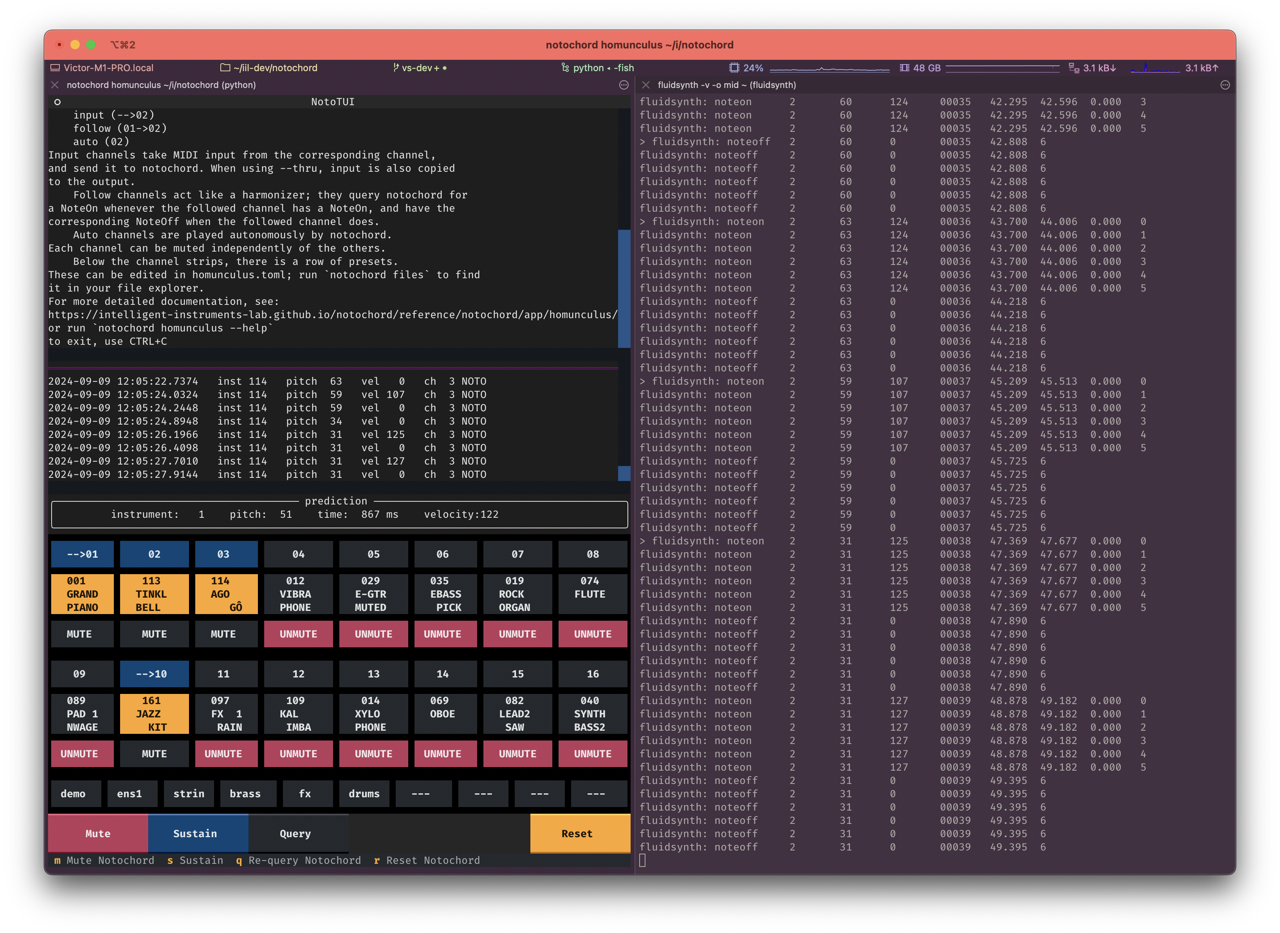

Using the Notochord Homunculus terminal app with FluidSynth.

Python Package Documentation | GitHub Repository

Notochord is a machine learning model for MIDI data: once trained on a corpus of MIDI files, it can generate music. What sets Notochord apart from similar MIDI models (like Magenta’s PerformanceRNN or the Metacreation Lab’s MMM) is that it generates one event at a time with very little delay, while allowing precise interventions into the note, timing, velocity, and instrumentation of each event. This makes Notochord a kind of software backbone for building intelligent MIDI instruments that you can play with your hands. An application can query Notochord to provide accompaniment, harmonization, AI improvisation, or weirder forms of MIDI mangling. See our videos for examples:
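To make the event-at-a-time interaction concrete, here is a minimal sketch in Python. It does not use the real Notochord API: `MidiEvent`, `ToyEventModel`, `feed`, and `query` are hypothetical names, and the toy model samples each field uniformly at random instead of conditioning on the event history the way a trained model would. What it illustrates is the interaction pattern: the application feeds each event as it happens, then asks for the next event while pinning or restricting some of its fields (instrument, allowed pitches, timing) and letting the model fill in the rest.

```python
import random
from dataclasses import dataclass


@dataclass
class MidiEvent:
    # Hypothetical field layout, for illustration only.
    inst: int    # instrument id
    pitch: int   # MIDI note number, 0-127
    time: float  # seconds since the previous event
    vel: int     # MIDI velocity, 1-127 for note-on (0 would mean note-off)


class ToyEventModel:
    """Hypothetical stand-in for a Notochord-like model.

    Each unconstrained field is sampled uniformly at random; a trained model
    would instead sample from distributions conditioned on self.history.
    """

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.history = []

    def feed(self, event):
        # Record an event that actually happened (played by a human or the model).
        self.history.append(event)

    def query(self, inst=None, pitches=None, time=None, vel=None):
        # Fixed arguments are passed through unchanged; `pitches` restricts
        # the pitch to a set; anything left as None is sampled freely.
        return MidiEvent(
            inst=inst if inst is not None else self.rng.randint(1, 128),
            pitch=self.rng.choice(sorted(pitches)) if pitches else self.rng.randint(0, 127),
            time=time if time is not None else self.rng.uniform(0.0, 0.5),
            vel=vel if vel is not None else self.rng.randint(1, 127),
        )


# Usage: answer a played note with a chord tone on a second instrument.
model = ToyEventModel(seed=42)
played = MidiEvent(inst=1, pitch=60, time=0.0, vel=90)   # human plays middle C
model.feed(played)
reply = model.query(inst=2, pitches={64, 67}, time=0.0)  # force inst 2, E or G, no delay
model.feed(reply)
print(reply)
```

Restricting pitch to a set, rather than fixing it outright, is what lets one call cover both harmonization (a few chord tones) and free improvisation (no restriction at all).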

ICMC 2025 demo paper on Notochord Homunculus

PDF link | Demo video

Original AIMC 2022 Paper

Zenodo link

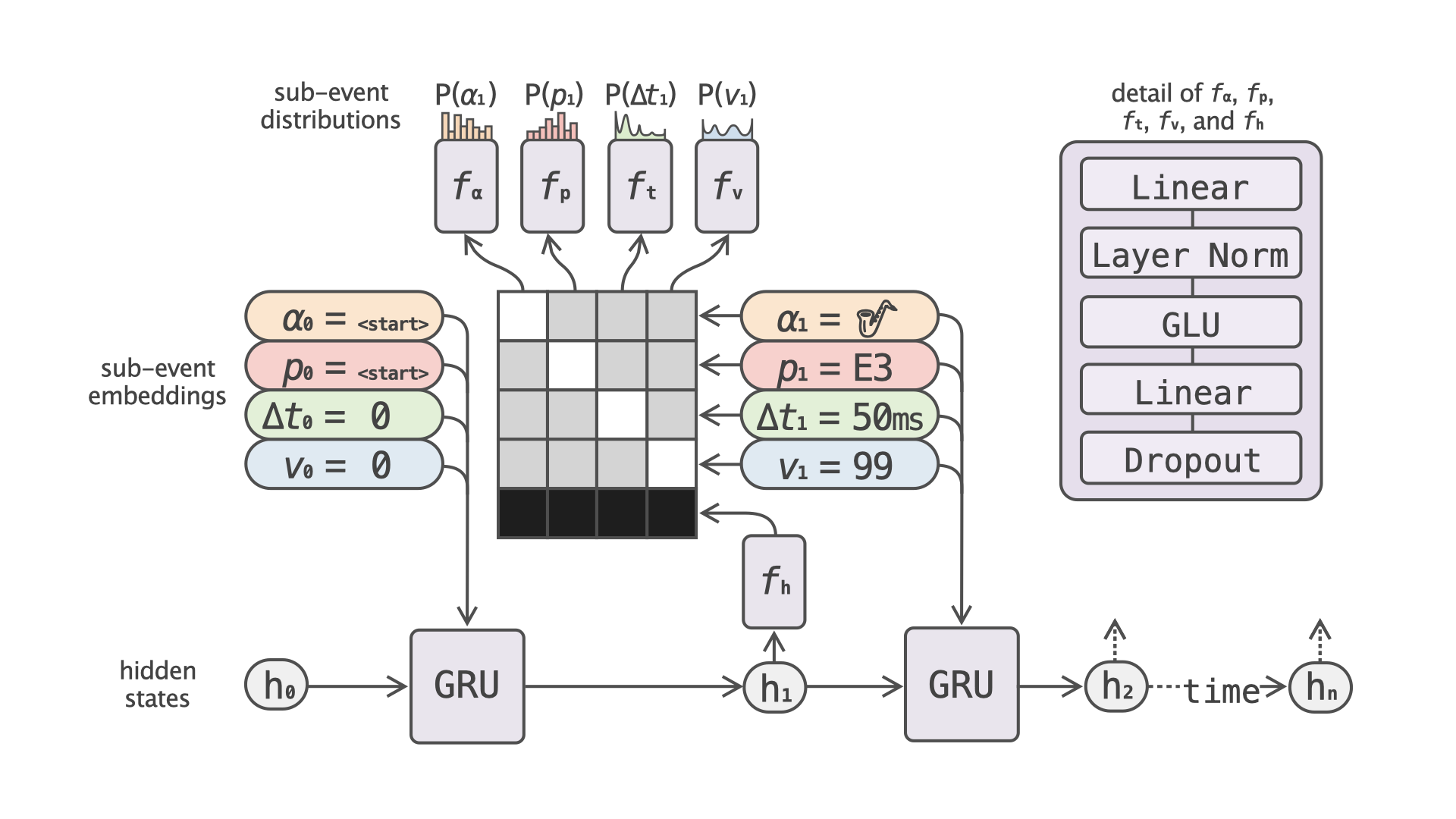

Architecture of the Notochord model (from the paper).