Workshop on Embedded AI at NIME 2022

Tue May 03 2022

We are welcoming submissions for the workshop Embedded AI for NIME: Challenges and Opportunities (https://embedded-ai-for-nime.github.io/) that will take place during the (online) NIME 2022 Conference (28th June - 1st July 2022).

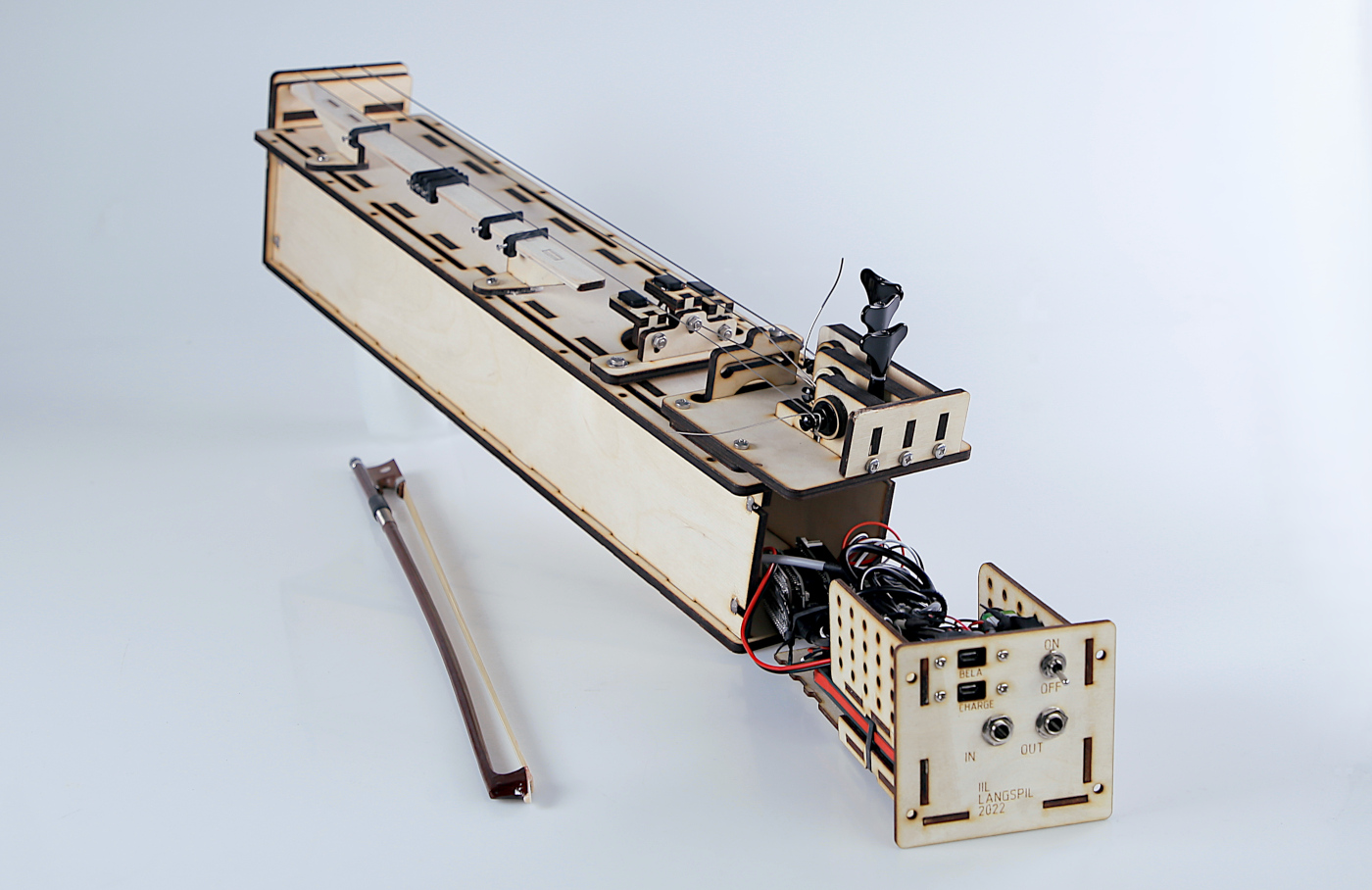

The Intelligent Instrument Lab's proto-langspil instrument with a Bela inside.

Deadline

3 June 2022, Anywhere on Earth (AoE, UTC-12)

Abstract

Despite recent advancements in low-resource computing hardware, such as microcontrollers or single-board computers, the deployment of machine learning or symbolic artificial intelligence (AI) techniques still presents several technical challenges and higher-level design constraints. With this workshop, we aim to: (1) bring together research practitioners who face such challenges in the context of NIME, (2) articulate these challenges and identify the tools and strategies currently being used to overcome them, (3) forge a community of practitioners of embedded AI for NIME, and (4) discuss critical approaches to the use of embedded AI for musical expression.

Embedded AI for NIME

Cutting-edge embedded systems have always been a part of NIME’s practices. Low-resource computing hardware, such as microcontrollers or single-board computers, can be embedded into digital musical instruments or interfaces to perform specific functions such as real-time digital signal processing of sensor data and sound. At the same time, interest in exploiting the creative potential of artificial intelligence (AI) for instrument design and musical expression has been growing within the NIME community in recent years.

Recent advancements in embedded computing have allowed for faster and more intensive computation. However, the deployment of machine learning or symbolic AI techniques still presents several technical challenges (e.g., data bandwidth, memory handling) and higher-level design constraints. Some of these challenges are general to embedded systems, while others are specific to musical interaction, particularly questions regarding real-time performance and latency.

Deploying AI models on embedded systems is an emerging and fast-changing field. A workshop is an excellent opportunity for practitioners to present works in progress and collaboratively identify shared challenges. We expect this workshop to serve as the starting point for an embedded AI NIME community and as a future reference to help researchers get started with embedded AI.

Submissions

The workshop will run as part of the NIME 2022 conference, and participants will need to be registered for NIME (https://nime2022.org/registration.html; scholarships are available here: https://auckland.au1.qualtrics.com/jfe/form/SV_efCpUL21iYGZFKC). Presenters will give a 10-minute talk. After each set of 2-3 talks, we will have a panel discussion with presenters, organisers and attendees. We aim to accommodate participants in different time zones by running two sessions spaced 5-7 hours apart.

We welcome proposal submissions in the form of extended abstracts. The extended abstract should describe the format of the talk, briefly summarise its contents, and explain its relevance to the workshop. The proposal can be for a demo, a poster presentation, a progress report, a short paper presentation, or anything else of relevance to the topic of Embedded AI for NIME that fits in 10 minutes. The proposal should be 400-600 words long and can be submitted here. Abstracts of successful submissions will be published in the online proceedings (participants will have the opportunity to edit the abstract for the camera-ready version).

Topics include, but are not limited to:

- Any technical prototype or concrete implementation of resource-constrained systems using AI in the context of NIME

- Design strategies and conceptual frameworks for embedded AI

- Interaction paradigms for systems using embedded AI

- Embedded and real-time neural audio synthesis methods (e.g., neural, artificial life, statistical methods)

- AR/MR/VR systems using AI in the context of NIME

- Mobile computing systems using AI in the context of NIME

- Musical uses of AI in embedded platforms

- Workflows for improving AI implementations between laptop, embedded/real-time and HPC platforms

- Development environments for interactive machine learning in embedded contexts, particularly those targeting non-expert users

- Values, biases, ethical and philosophical issues with embedded AI in musical performance

- Inclusivity and diversity in emerging embedded AI communities

Organisers

Augmented Instruments Lab, Queen Mary University of London: Teresa Pelinski, Franco Santiago Caspe, Adán L Benito Temprano, Andrew McPherson

Intelligent Instruments Lab, Iceland University of the Arts: Victor Shepardson, Jack Armitage, Thor Magnusson

Experimental Music Technologies Lab, University of Sussex: Steve Symons, Chris Kiefer

Creative Computing Institute, University of the Arts London: Rebecca Fiebrink

For additional information or questions, you can contact Teresa Pelinski (t.pelinskiramos@qmul.ac.uk).